Comprehensive benchmark of 16 on-device speech-to-text models across 9 inference engines on Android, iOS, macOS, and Windows

Source Code:

- android-offline-transcribe — Android benchmark app with 16 ASR models and 6 inference engines

- ios-mac-offline-transcribe — iOS and macOS benchmark app with 17 ASR models and 9 inference engines

- windows-offline-transcribe — Windows benchmark app with 12 ASR models, CPU-only

Abstract

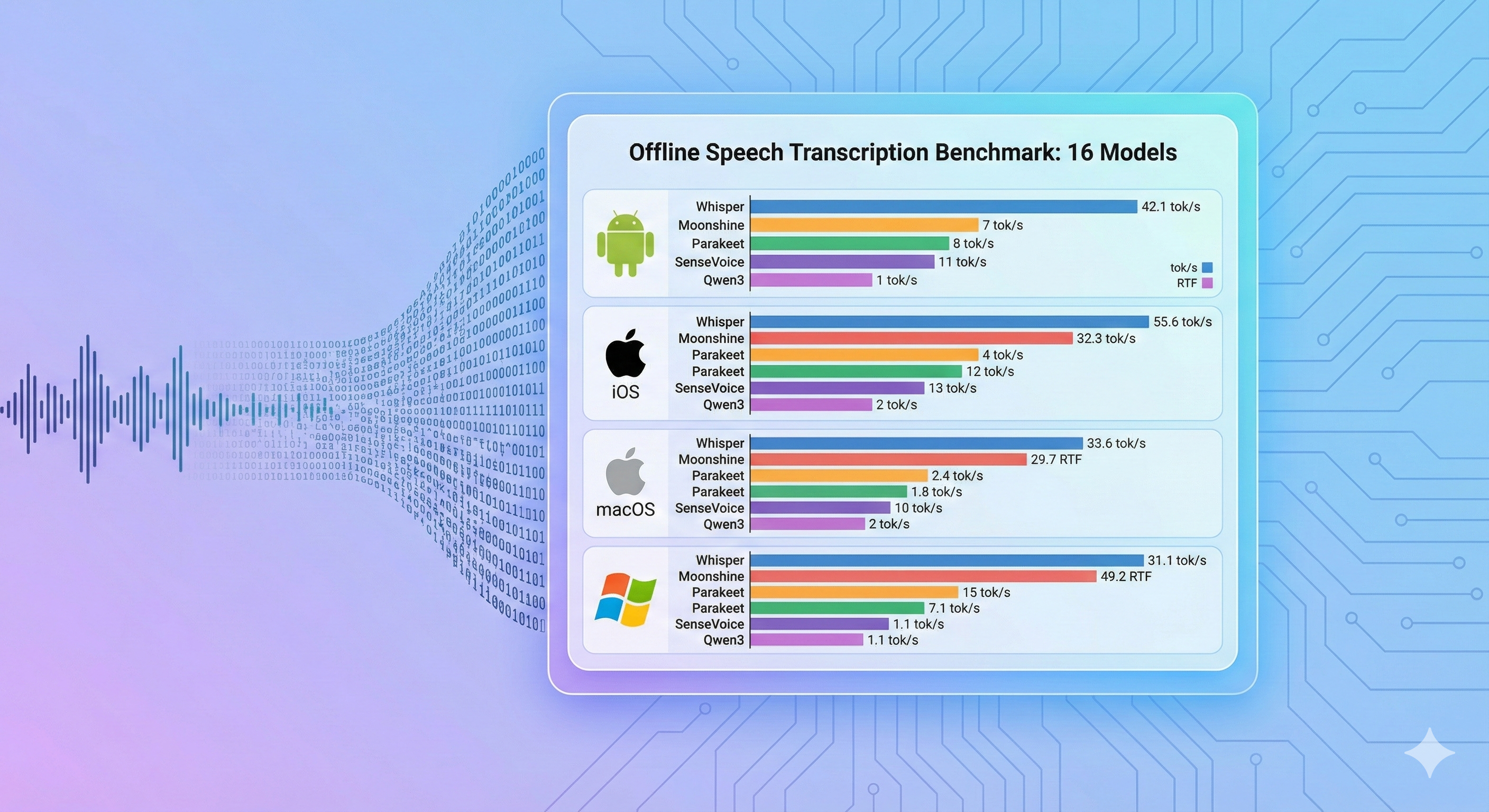

We benchmark 16 on-device speech-to-text models across 9 inference engines on Android, iOS, macOS, and Windows, measuring inference speed (tok/s), real-time factor (RTF), model size, and out-of-memory (OOM) behavior. This benchmark measures speed only; transcription accuracy (WER/CER) is not evaluated. Key findings: the choice of inference engine can change performance by 51x for the same model (sherpa-onnx vs whisper.cpp running Whisper Tiny on Android); Moonshine Tiny and SenseVoice Small are the fastest models on Android and Windows, while Parakeet TDT v3 via FluidAudio (CoreML) is the fastest on iOS and macOS; and WhisperKit CoreML runs out of memory on the 4 GB iOS test device for every model above Whisper Tiny. All benchmark apps and results are open-source.

Motivation

Developers building voice-enabled edge applications face a combinatorial selection problem: dozens of ASR models (from 31 MB Whisper Tiny to 1.8 GB Qwen3 ASR), multiple inference engines (ONNX Runtime, CoreML, whisper.cpp, MLX), and 4+ target platforms — each combination producing vastly different speed and memory characteristics. Published model benchmarks typically report results on server GPUs, not the consumer mobile and laptop hardware where these models actually deploy.

This benchmark addresses the deployment choice problem directly: which model + engine combination delivers real-time transcription on each target platform, and what are the memory constraints? The results enable developers to select a model/engine pair based on measured data from their target device class, rather than extrapolating from GPU benchmarks.

Methodology

Android, iOS, and macOS benchmarks use the same 30-second WAV file containing a looped segment of the JFK inaugural address (16 kHz, mono, PCM 16-bit). Windows benchmarks use an 11-second excerpt from the same source (see Windows section for details).
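The input format above can be checked before a run. The following is a minimal sketch using Python's standard `wave` module, assuming the clip is a plain PCM WAV file; `benchmark_wav_duration` and `write_silent_wav` are hypothetical names, not code from the benchmark apps. The returned duration is the audio length that RTF divides by.

```python
import os
import tempfile
import wave

SAMPLE_RATE_HZ = 16_000  # methodology: 16 kHz
CHANNELS = 1             # mono
SAMPLE_WIDTH_BYTES = 2   # PCM 16-bit


def benchmark_wav_duration(path: str) -> float:
    """Check that a WAV file matches the benchmark input format and
    return its duration in seconds."""
    with wave.open(path, "rb") as wav:
        if wav.getframerate() != SAMPLE_RATE_HZ:
            raise ValueError(f"expected {SAMPLE_RATE_HZ} Hz, got {wav.getframerate()} Hz")
        if wav.getnchannels() != CHANNELS:
            raise ValueError(f"expected mono, got {wav.getnchannels()} channels")
        if wav.getsampwidth() != SAMPLE_WIDTH_BYTES:
            raise ValueError(f"expected 16-bit samples, got {8 * wav.getsampwidth()}-bit")
        return wav.getnframes() / wav.getframerate()


def write_silent_wav(path: str, seconds: int, rate: int = SAMPLE_RATE_HZ) -> None:
    """Hypothetical helper: write a silent mono 16-bit clip for testing."""
    with wave.open(path, "wb") as out:
        out.setnchannels(CHANNELS)
        out.setsampwidth(SAMPLE_WIDTH_BYTES)
        out.setframerate(rate)
        # one zero-valued 16-bit sample per frame
        out.writeframes(b"\x00\x00" * rate * seconds)


if __name__ == "__main__":
    clip = os.path.join(tempfile.mkdtemp(), "silence_30s.wav")
    write_silent_wav(clip, 30)
    print(benchmark_wav_duration(clip))  # 30.0
```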

Metrics (speed-only — this benchmark does not measure transcription accuracy/WER):

- Inference: Wall-clock time from engine call to result

- tok/s: Output words per second (despite the label, words are counted, not model tokens; reported as Words/s in the Windows table; higher = faster)

- RTF: Real-Time Factor — ratio of processing time to audio duration (below 1.0 = faster than real-time)
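Both metrics are simple ratios of the measured wall-clock time. The following is a minimal sketch, assuming the transcript's word count is already known; the `Run` type and the function names are hypothetical, not taken from the benchmark apps. It is checked against the Windows Moonshine Tiny row, which reports 435 ms on the 11-second, 22-word clip.

```python
from dataclasses import dataclass


@dataclass
class Run:
    inference_ms: float   # wall-clock time from engine call to result
    output_words: int     # words in the returned transcript
    audio_seconds: float  # duration of the input clip


def tok_per_s(run: Run) -> float:
    """The 'tok/s' metric: output words per second of inference (higher = faster)."""
    return run.output_words / (run.inference_ms / 1000)


def rtf(run: Run) -> float:
    """Real-time factor: processing time / audio duration (< 1.0 = faster than real time)."""
    return (run.inference_ms / 1000) / run.audio_seconds


if __name__ == "__main__":
    # Windows, Moonshine Tiny: 435 ms on the 11-second, 22-word clip
    run = Run(inference_ms=435, output_words=22, audio_seconds=11)
    print(f"{tok_per_s(run):.1f} words/s, RTF {rtf(run):.3f}")  # 50.6 words/s, RTF 0.040
```

Because the metric counts words rather than model tokens, it stays comparable across models with different tokenizers.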

Devices:

| Device | Chip | RAM | OS |
|---|---|---|---|
| Samsung Galaxy S10 | Exynos 9820 | 8 GB | Android 12 (API 31) |
| iPad Pro 3rd gen | A12X Bionic | 4 GB | iOS 17+ |
| MacBook Air | Apple M4 | 32 GB | macOS 15+ |
| Laptop | Intel Core i5-1035G1 | 8 GB | Windows (CPU-only) |

Android Results

Device: Samsung Galaxy S10, Android 12, API 31

| Model | Engine | Params | Size | Languages | Inference | tok/s | RTF | Result |
|---|---|---|---|---|---|---|---|---|
| Moonshine Tiny | sherpa-onnx | 27M | ~125 MB | English | 1,363 ms | 42.55 | 0.05 | ✅ |
| SenseVoice Small | sherpa-onnx | 234M | ~240 MB | zh/en/ja/ko/yue | 1,725 ms | 33.62 | 0.06 | ✅ |
| Whisper Tiny | sherpa-onnx | 39M | ~100 MB | 99 languages | 2,068 ms | 27.08 | 0.07 | ✅ |
| Moonshine Base | sherpa-onnx | 61M | ~290 MB | English | 2,251 ms | 25.77 | 0.08 | ✅ |
| Parakeet TDT 0.6B v3 | sherpa-onnx | 600M | ~671 MB | 25 European | 2,841 ms | 20.41 | 0.09 | ✅ |
| Android Speech (Offline) | SpeechRecognizer | System | Built-in | 50+ languages | 3,615 ms | 1.38 | 0.12 | ✅ |
| Android Speech (Online) | SpeechRecognizer | System | Built-in | 100+ languages | 3,591 ms | 1.39 | 0.12 | ✅ |
| Zipformer Streaming | sherpa-onnx streaming | 20M | ~73 MB | English | 3,568 ms | 16.26 | 0.12 | ✅ |
| Whisper Base (.en) | sherpa-onnx | 74M | ~160 MB | English | 3,917 ms | 14.81 | 0.13 | ✅ |
| Whisper Base | sherpa-onnx | 74M | ~160 MB | 99 languages | 4,038 ms | 14.36 | 0.13 | ✅ |
| Whisper Small | sherpa-onnx | 244M | ~490 MB | 99 languages | 12,329 ms | 4.70 | 0.41 | ✅ |
| Qwen3 ASR 0.6B (ONNX) | ONNX Runtime INT8 | 600M | ~1.9 GB | 30 languages | 15,881 ms | 3.65 | 0.53 | ✅ |
| Whisper Turbo | sherpa-onnx | 809M | ~1.0 GB | 99 languages | 17,930 ms | 3.23 | 0.60 | ✅ |
| Whisper Tiny (whisper.cpp) | whisper.cpp GGML | 39M | ~31 MB | 99 languages | 105,596 ms | 0.55 | 3.52 | ✅ |
| Qwen3 ASR 0.6B (CPU) | Pure C/NEON | 600M | ~1.8 GB | 30 languages | 338,261 ms | 0.17 | 11.28 | ✅ |
| Omnilingual 300M | sherpa-onnx | 300M | ~365 MB | 1,600+ languages | 44,035 ms | 0.05 | 1.47 | ❌ |

15 of 16 models pass, with no out-of-memory (OOM) failures. The sole failure, Omnilingual 300M, is a known model limitation with English language detection.
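The selection problem described in the Motivation section can be applied directly to these rows. The following is a minimal sketch, assuming "fastest" means lowest RTF and that any passing run with RTF below 1.0 qualifies. The `Result` type and `pick_fastest` function are hypothetical, and the two system recognizers are left out. Because accuracy was not measured, the output is a speed shortlist rather than a recommendation.

```python
from typing import NamedTuple, Optional


class Result(NamedTuple):
    model: str
    engine: str
    rtf: float
    tok_s: float
    passed: bool
    english_only: bool


# Rows copied from the Galaxy S10 table above (system recognizers omitted).
ANDROID_S10 = [
    Result("Moonshine Tiny", "sherpa-onnx", 0.05, 42.55, True, True),
    Result("SenseVoice Small", "sherpa-onnx", 0.06, 33.62, True, False),
    Result("Whisper Tiny", "sherpa-onnx", 0.07, 27.08, True, False),
    Result("Moonshine Base", "sherpa-onnx", 0.08, 25.77, True, True),
    Result("Parakeet TDT 0.6B v3", "sherpa-onnx", 0.09, 20.41, True, False),
    Result("Zipformer Streaming", "sherpa-onnx streaming", 0.12, 16.26, True, True),
    Result("Whisper Base (.en)", "sherpa-onnx", 0.13, 14.81, True, True),
    Result("Whisper Base", "sherpa-onnx", 0.13, 14.36, True, False),
    Result("Whisper Small", "sherpa-onnx", 0.41, 4.70, True, False),
    Result("Qwen3 ASR 0.6B (ONNX)", "ONNX Runtime INT8", 0.53, 3.65, True, False),
    Result("Whisper Turbo", "sherpa-onnx", 0.60, 3.23, True, False),
    Result("Whisper Tiny (whisper.cpp)", "whisper.cpp GGML", 3.52, 0.55, True, False),
    Result("Qwen3 ASR 0.6B (CPU)", "Pure C/NEON", 11.28, 0.17, True, False),
    Result("Omnilingual 300M", "sherpa-onnx", 1.47, 0.05, False, False),
]


def pick_fastest(results, need_multilingual: bool = False,
                 max_rtf: float = 1.0) -> Optional[Result]:
    """Lowest-RTF passing model/engine pair under the RTF budget, or None."""
    candidates = [
        r for r in results
        if r.passed
        and r.rtf < max_rtf
        and not (need_multilingual and r.english_only)
    ]
    return min(candidates, key=lambda r: r.rtf, default=None)


if __name__ == "__main__":
    print(pick_fastest(ANDROID_S10).model)                          # Moonshine Tiny
    print(pick_fastest(ANDROID_S10, need_multilingual=True).model)  # SenseVoice Small
```

Ranking by RTF rather than tok/s keeps the ordering tied to processing time alone, since tok/s also depends on how many words a model emits.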

Android Engine Comparison: Same Model, Different Backends

The Whisper Tiny model shows dramatic performance differences depending on the inference backend:

| Backend | Inference | tok/s | Speedup vs whisper.cpp |
|---|---|---|---|
| sherpa-onnx (ONNX) | 2,068 ms | 27.08 | 51x |
| whisper.cpp (GGML) | 105,596 ms | 0.55 | 1x (baseline) |

sherpa-onnx is 51x faster than whisper.cpp for the same Whisper Tiny model on Android — a critical finding for developers choosing an inference runtime.
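The 51x figure is the ratio of the two inference times for the same model and clip. A minimal sketch follows (the `speedup` name is hypothetical):

```python
def speedup(baseline_ms: float, candidate_ms: float) -> float:
    """How many times faster the candidate is than the baseline (same model, same clip)."""
    return baseline_ms / candidate_ms


if __name__ == "__main__":
    # Whisper Tiny on the Galaxy S10: whisper.cpp (baseline) vs sherpa-onnx
    print(f"{speedup(105_596, 2_068):.1f}x")  # 51.1x
```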

iOS Results

Device: iPad Pro 3rd gen, A12X Bionic, 4 GB RAM

| Model | Engine | Params | Size | Languages | tok/s | Status |
|---|---|---|---|---|---|---|
| Parakeet TDT v3 | FluidAudio (CoreML) | 600M | ~600 MB (CoreML) | 25 European | 181.8 | ✅ |
| Zipformer 20M | sherpa-onnx streaming | 20M | ~46 MB (INT8) | English | 39.7 | ✅ |
| Whisper Tiny | whisper.cpp | 39M | ~31 MB (GGML Q5_1) | 99 languages | 37.8 | ✅ |
| Moonshine Tiny | sherpa-onnx offline | 27M | ~125 MB (INT8) | English | 37.3 | ✅ |
| Moonshine Base | sherpa-onnx offline | 61M | ~280 MB (INT8) | English | 31.3 | ✅ |
| Whisper Base | WhisperKit (CoreML) | 74M | ~150 MB (CoreML) | English | 19.6 | ❌ OOM on 4 GB |
| SenseVoice Small | sherpa-onnx offline | 234M | ~240 MB (INT8) | zh/en/ja/ko/yue | 15.6 | ✅ |
| Whisper Base | whisper.cpp | 74M | ~57 MB (GGML Q5_1) | 99 languages | 13.8 | ✅ |
| Whisper Small | WhisperKit (CoreML) | 244M | ~500 MB (CoreML) | 99 languages | 6.3 | ❌ OOM on 4 GB |
| Qwen3 ASR 0.6B | Pure C (ARM NEON) | 600M | ~1.8 GB | 30 languages | 5.6 | ✅ |
| Qwen3 ASR 0.6B (ONNX) | ONNX Runtime (INT8) | 600M | ~1.6 GB (INT8) | 30 languages | 5.4 | ✅ |
| Whisper Tiny | WhisperKit (CoreML) | 39M | ~80 MB (CoreML) | 99 languages | 4.5 | ✅ |
| Whisper Small | whisper.cpp | 244M | ~181 MB (GGML Q5_1) | 99 languages | 3.9 | ✅ |
| Whisper Large v3 Turbo (compressed) | WhisperKit (CoreML) | 809M | ~1 GB (CoreML) | 99 languages | 1.9 | ❌ OOM on 4 GB |
| Whisper Large v3 Turbo | WhisperKit (CoreML) | 809M | ~600 MB (CoreML) | 99 languages | 1.4 | ❌ OOM on 4 GB |
| Whisper Large v3 Turbo | whisper.cpp | 809M | ~547 MB (GGML Q5_0) | 99 languages | 0.8 | ⚠️ RTF >1 |
| Whisper Large v3 Turbo (compressed) | whisper.cpp | 809M | ~834 MB (GGML Q8_0) | 99 languages | 0.8 | ⚠️ RTF >1 |

WhisperKit OOM warning: On 4 GB devices, WhisperKit CoreML crashes (OOM) for Whisper Base and above. The tok/s values shown for OOM models were measured before the crash occurred and do not represent complete successful runs. whisper.cpp handles the same models without OOM, though at lower throughput.
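On a 4 GB device the OOM rows have to be discarded before comparing engines, because their tok/s values come from incomplete runs. The following is a minimal sketch over the Whisper rows of the iOS table (uncompressed Turbo only). The data layout and `usable_engine_per_model` are hypothetical, and the status strings are shorthand for the table's Status column.

```python
# (model, engine, tok/s, status) for Whisper on the 4 GB iPad Pro, from the iOS table.
# status: "ok", "oom" (crashed; tok/s measured before the crash), or "rtf>1".
IPAD_WHISPER = [
    ("Whisper Tiny", "whisper.cpp", 37.8, "ok"),
    ("Whisper Tiny", "WhisperKit", 4.5, "ok"),
    ("Whisper Base", "WhisperKit", 19.6, "oom"),
    ("Whisper Base", "whisper.cpp", 13.8, "ok"),
    ("Whisper Small", "WhisperKit", 6.3, "oom"),
    ("Whisper Small", "whisper.cpp", 3.9, "ok"),
    ("Whisper Large v3 Turbo", "WhisperKit", 1.4, "oom"),
    ("Whisper Large v3 Turbo", "whisper.cpp", 0.8, "rtf>1"),
]


def usable_engine_per_model(rows):
    """For each model, the fastest engine that finished without OOM in real time."""
    best = {}  # model -> (engine, tok/s)
    for model, engine, tok_s, status in rows:
        if status != "ok":
            continue  # OOM values are partial; RTF > 1 misses real time
        if model not in best or tok_s > best[model][1]:
            best[model] = (engine, tok_s)
    return {model: engine for model, (engine, _) in best.items()}


if __name__ == "__main__":
    for model, engine in usable_engine_per_model(IPAD_WHISPER).items():
        print(f"{model}: {engine}")
```

Under these rows, whisper.cpp is the only engine that completes every Whisper size up to Small on the iPad Pro, and no Whisper Large v3 Turbo configuration meets real time.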

macOS Results

Device: MacBook Air M4, 32 GB RAM

| Model | Engine | Params | Size | Languages | tok/s | Status |
|---|---|---|---|---|---|---|
| Parakeet TDT v3 | FluidAudio (CoreML) | 600M | ~600 MB (CoreML) | 25 European | 171.6 | ✅ |
| Moonshine Tiny | sherpa-onnx offline | 27M | ~125 MB (INT8) | English | 92.2 | ✅ |
| Zipformer 20M | sherpa-onnx streaming | 20M | ~46 MB (INT8) | English | 77.4 | ✅ |
| Moonshine Base | sherpa-onnx offline | 61M | ~280 MB (INT8) | English | 59.3 | ✅ |
| SenseVoice Small | sherpa-onnx offline | 234M | ~240 MB (INT8) | zh/en/ja/ko/yue | 27.4 | ✅ |
| Whisper Tiny | WhisperKit (CoreML) | 39M | ~80 MB (CoreML) | 99 languages | 24.7 | ✅ |
| Whisper Base | WhisperKit (CoreML) | 74M | ~150 MB (CoreML) | English | 23.3 | ✅ |
| Apple Speech | SFSpeechRecognizer | System | Built-in | 50+ languages | 13.1 | ✅ |
| Whisper Small | WhisperKit (CoreML) | 244M | ~500 MB (CoreML) | 99 languages | 8.7 | ✅ |
| Qwen3 ASR 0.6B (ONNX) | ONNX Runtime (INT8) | 600M | ~1.6 GB (INT8) | 30 languages | 8.0 | ✅ |
| Qwen3 ASR 0.6B | Pure C (ARM NEON) | 600M | ~1.8 GB | 30 languages | 5.7 | ✅ |
| Whisper Large v3 Turbo | WhisperKit (CoreML) | 809M | ~600 MB (CoreML) | 99 languages | 1.9 | ✅ |
| Whisper Large v3 Turbo (compressed) | WhisperKit (CoreML) | 809M | ~1 GB (CoreML) | 99 languages | 1.5 | ✅ |
| Qwen3 ASR 0.6B (MLX) | MLX (Metal GPU) | 600M | ~400 MB (4-bit) | 30 languages | — | Not benchmarked |
| Omnilingual 300M | sherpa-onnx offline | 300M | ~365 MB (INT8) | 1,600+ languages | 0.03 | ❌ English broken |

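No model ran out of memory on macOS. With 32 GB RAM, every WhisperKit CoreML model, including both Whisper Large v3 Turbo variants, ran to completion. The only failure, Omnilingual 300M, shows the same English-output problem as on Android, and the MLX build of Qwen3 ASR was not benchmarked.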

Windows Results

Device: Intel Core i5-1035G1 @ 1.00 GHz (4C/8T), 8 GB RAM, CPU-only

| Model | Engine | Params | Size | Inference | Words/s | RTF |
|---|---|---|---|---|---|---|
| Moonshine Tiny | sherpa-onnx offline | 27M | ~125 MB | 435 ms | 50.6 | 0.040 |
| SenseVoice Small | sherpa-onnx offline | 234M | ~240 MB | 462 ms | 47.6 | 0.042 |
| Moonshine Base | sherpa-onnx offline | 61M | ~290 MB | 534 ms | 41.2 | 0.049 |
| Parakeet TDT v2 | sherpa-onnx offline | 600M | ~660 MB | 1,239 ms | 17.8 | 0.113 |
| Zipformer 20M | sherpa-onnx streaming | 20M | ~73 MB | 1,775 ms | 12.4 | 0.161 |
| Whisper Tiny | whisper.cpp | 39M | ~80 MB | 2,325 ms | 9.5 | 0.211 |
| Omnilingual 300M | sherpa-onnx offline | 300M | ~365 MB | 2,360 ms | — | 0.215 |
| Whisper Base | whisper.cpp | 74M | ~150 MB | 6,501 ms | 3.4 | 0.591 |
| Qwen3 ASR 0.6B | qwen-asr (C) | 600M | ~1.9 GB | 13,359 ms | 1.6 | 1.214 |
| Whisper Small | whisper.cpp | 244M | ~500 MB | 21,260 ms | 1.0 | 1.933 |
| Whisper Large v3 Turbo | whisper.cpp | 809M | ~834 MB | 92,845 ms | 0.2 | 8.440 |
| Windows Speech | Windows Speech API | N/A | 0 MB | — | — | — |

Tested with an 11-second excerpt of the JFK inaugural address (22 words). All models run CPU-only on x86_64 (i5-1035G1, 4C/8T), with no GPU acceleration. Parakeet TDT v2 is notable for combining fast inference (17.8 words/s) with full punctuation output.

Limitations

- Speed only: This benchmark measures inference speed, not transcription accuracy (WER/CER). Accuracy varies by model, language, and audio conditions — developers should evaluate accuracy separately for their target use case.

- Single audio sample: All platforms use a single JFK inaugural address recording. Results may differ with other audio characteristics (noise, accents, domain-specific vocabulary).

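- Windows clip length: Windows uses an 11-second audio clip vs 30 seconds on the other platforms. RTF divides by clip length, but not every cost scales with audio duration (Whisper models, for example, encode a fixed 30-second window regardless of clip length), so Windows RTF and words/s values are not directly comparable with the other platforms.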

- iOS OOM values: The tok/s values shown for OOM-marked iOS models were measured before the crash and do not represent complete successful runs.

Further Research

- Add accuracy benchmarks: Pair speed metrics with WER/CER across multilingual datasets and noisy speech conditions.

- Expand audio conditions: Add long-form audio, overlapping speakers, and domain vocabulary (meetings, call-center, industrial) instead of one speech sample.

- Quantization sweep: Benchmark INT8/INT4 and mixed-precision variants across engines to map memory/speed/accuracy trade-offs.

- Other Qwen3-ASR checkpoints: Compare the Qwen3-ASR-0.6B model tested here against any future sub-1B checkpoints (for example, a potential 0.8B release) to test whether the smaller model's speed gains justify its quality loss.

- Power and thermal profiling: Add battery drain and sustained-performance measurements for continuous on-device transcription workloads.

Conclusion

On-device speech transcription achieves faster-than-real-time speeds on all four platforms tested, given a suitable model and engine. The choice of inference engine matters as much as model selection: on Android, sherpa-onnx runs Whisper Tiny 51x faster than whisper.cpp; on Apple hardware, the fastest result comes from Parakeet TDT v3 on CoreML via FluidAudio, yet for Whisper Tiny on iOS, whisper.cpp is about 8x faster than WhisperKit CoreML (37.8 vs 4.5 tok/s) and, unlike WhisperKit, completes larger Whisper models on a 4 GB device. Among the fastest models, Moonshine Tiny (English) or Whisper Tiny (multilingual) with the right engine achieves real-time transcription at minimal memory cost. Model selection should also consider transcription accuracy (WER), which was not measured in this benchmark.

References

Our Repositories:

- android-offline-transcribe — Android benchmark app (Apache 2.0)

- ios-mac-offline-transcribe — iOS/macOS benchmark app (Apache 2.0)

- windows-offline-transcribe — Windows benchmark app (Apache 2.0)

Models:

- Moonshine Tiny/Base — Useful Sensors, English-only

- Whisper Tiny/Base/Small/Turbo — OpenAI, 99 languages

- SenseVoice Small — FunAudioLLM, 5 languages

- Parakeet TDT 0.6B v3 — NVIDIA NeMo, 25 European languages

- Qwen3 ASR 0.6B — Alibaba Qwen, 30 languages

- Zipformer Streaming — Next-gen Kaldi, English

- Omnilingual 300M — MMS, 1,600+ languages

Inference Engines:

- sherpa-onnx — Next-gen Kaldi speech toolkit built on ONNX Runtime

- whisper.cpp — C/C++ port of OpenAI Whisper

- WhisperKit — Whisper on CoreML for Apple platforms

- MLX — Apple's machine learning framework for Apple silicon